# Airflow docker run parameters update

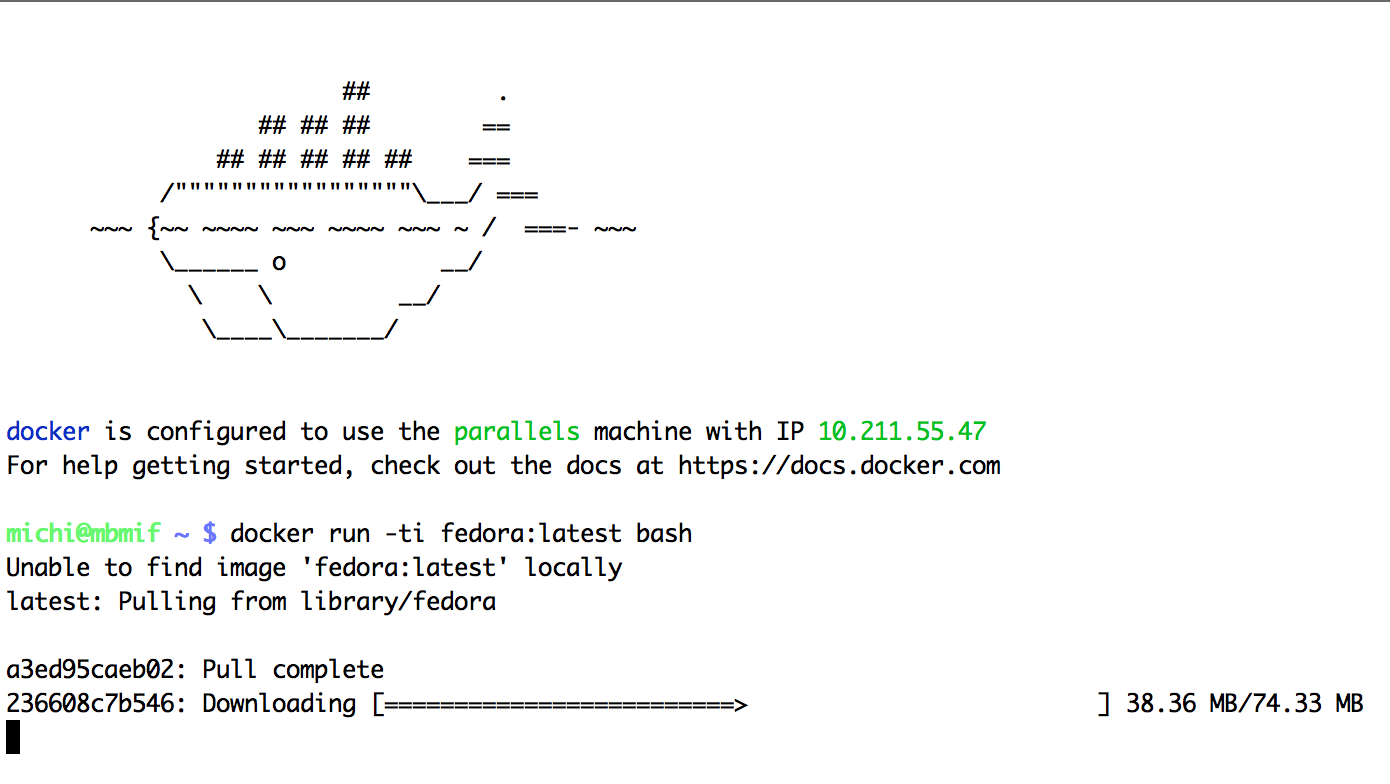

We'll use the Apache Airflow DockerOperator to run Apache Hop workflows and pipelines from an embedded container in Apache Airflow. Again, you don't need to be an Apache Airflow, Docker, or Python expert to create DAGs; we'll treat DAGs as just another text file.

First, Airflow tells a worker to execute the task by scheduling it (1). Next, the DockerOperator executes a `docker run` command on the Worker machine with the appropriate arguments (2). Then, if needed, the Docker daemon fetches the required Docker image from the Docker registry (3).

Is it correct to set up a debug config that runs Airflow's binary directly? When I use the Airflow worker as the interpreter and run in debug mode, I get:

    data-pipeline-airflow-worker-1 | /home/airflow/.local/lib/python3.7/site-packages/airflow/configuration.py:360: FutureWarning: The auth_backends setting in has had .session added in the running config, which is needed by the UI. Please update your config before Apache Airflow 3.0.
    data-pipeline-airflow-worker-1 | FutureWarning,
    data-pipeline-airflow-worker-1 | airflow command error: the following arguments are required: GROUP_OR_COMMAND, see help above.
    data-pipeline-airflow-worker-1 | usage: airflow GROUP_OR_COMMAND

Which container should I select when creating the Python interpreter?

Re: when running Airflow in Docker, how do you get it to run the DAG/tasks on the host machine rather than inside the container? For example, if I want to run a bash script on the host machine and pass its file path to the task, how does the task know that the path is on the host and not inside the container?

I have tried to follow this article. The problematic parts are:
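To make step (2) more concrete, here is a minimal sketch of the kind of `docker run` invocation that corresponds to one containerized Hop task. It is an illustration only. As far as we know, the Docker provider's DockerOperator talks to the Docker daemon through the Docker SDK for Python rather than shelling out to the CLI. The `DockerTask` class, the `build_docker_run_argv` helper, the `apache/hop:latest` tag, the `HOP_FILE_PATH` variable, and the paths are all assumptions made for this example. They are not the operator's API.

```python
"""Hypothetical sketch: the `docker run` argv equivalent to one containerized task."""
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class DockerTask:
    # Hypothetical task description; field names are illustrative and are
    # NOT DockerOperator's real parameter names or types.
    image: str
    command: list[str] = field(default_factory=list)
    environment: dict[str, str] = field(default_factory=dict)
    volumes: dict[str, str] = field(default_factory=dict)  # host_dir -> container_dir
    auto_remove: bool = True


def build_docker_run_argv(task: DockerTask) -> list[str]:
    """Return the `docker run` argument list that corresponds to `task`."""
    argv = ["docker", "run"]
    if task.auto_remove:
        argv.append("--rm")  # delete the container once it exits
    for name, value in sorted(task.environment.items()):
        argv += ["-e", f"{name}={value}"]  # pass settings as env variables
    for host_dir, container_dir in sorted(task.volumes.items()):
        argv += ["-v", f"{host_dir}:{container_dir}"]  # bind-mount a host directory
    # By default, `docker run` pulls the image from the registry only if it
    # is not already available locally (step 3).
    argv.append(task.image)
    argv += task.command
    return argv


if __name__ == "__main__":
    task = DockerTask(
        image="apache/hop:latest",  # assumed image tag
        environment={"HOP_FILE_PATH": "/files/pipelines/example.hpl"},  # assumed variable, hypothetical file
        volumes={"/opt/hop/files": "/files"},  # hypothetical host directory
    )
    print(" ".join(build_docker_run_argv(task)))
    # -> docker run --rm -e HOP_FILE_PATH=/files/pipelines/example.hpl -v /opt/hop/files:/files apache/hop:latest
```

Keeping the task description separate from the argv builder mirrors the flow above. The DAG only declares *what* to run, and the worker turns that declaration into container arguments at execution time.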

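On the host-path question, a process inside a container only sees the container's filesystem. A host path is meaningless to the task unless its directory is bind-mounted into the container (for example with `-v host_dir:container_dir`). The task must then be given the *container-side* path. The same applies to an Airflow worker that itself runs in Docker. The sketch below shows that translation with a hypothetical `to_container_path` helper and a hypothetical mount table. It only computes paths and does not execute anything on the host.

```python
"""Hypothetical sketch: map a host path to the path a container actually sees."""
from __future__ import annotations

from pathlib import PurePosixPath


def to_container_path(host_path: str, mounts: dict[str, str]) -> str | None:
    """Translate `host_path` using host_dir -> container_dir bind mounts.

    Returns None when the path is not under any mounted directory, meaning
    the container cannot see that file at all.
    """
    path = PurePosixPath(host_path)
    # Try the longest (most specific) mounted host directory first.
    for host_dir in sorted(mounts, key=len, reverse=True):
        try:
            relative = path.relative_to(host_dir)  # compares whole path components
        except ValueError:
            continue  # not under this mount
        return str(PurePosixPath(mounts[host_dir]) / relative)
    return None


if __name__ == "__main__":
    # Hypothetical mount table: a host DAG folder mounted into the worker.
    mounts = {"/home/me/airflow/dags": "/opt/airflow/dags"}
    print(to_container_path("/home/me/airflow/dags/scripts/run.sh", mounts))
    # -> /opt/airflow/dags/scripts/run.sh
    print(to_container_path("/usr/local/bin/host_only.sh", mounts))
    # -> None (not mounted, so the task cannot reach it)
```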